Z-Wave devices powered by batteries need regular maintenance once the battery is depleted. For a user it is very convenient to be notified whenever the battery of an individual device runs low.

Many users of Fibaro’s Home Center Light (HCL), myself included, are annoyed by the way low-battery alarms are implemented. While it is very useful to be notified about low batteries, which gives you the chance to charge or replace them in time, the current HCL implementation apparently just forwards the Z-Wave battery level alarm and repeats it every 30 minutes. When I’m travelling and cannot act immediately, I end up with tens or hundreds of notifications in my inbox. In the Fibaro forum someone suggested turning off the email notification feature in the device configuration(s), but that way one risks missing the moment to charge a battery. This isn’t ideal, either. Yet another suggestion is to do some magic with LUA scenes, but unfortunately Home Center Light doesn’t allow LUA scripting (it’s an exclusive feature of the much more expensive Home Center 2).

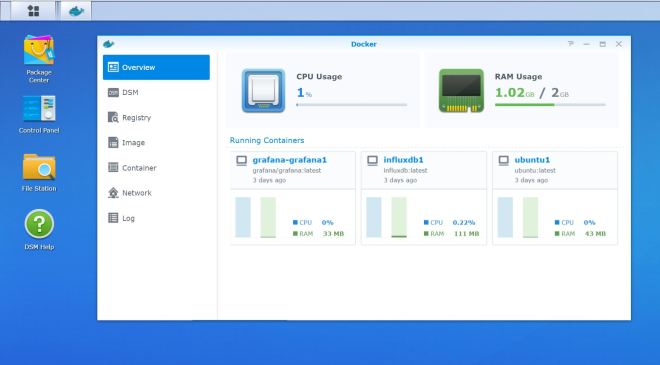

Fortunately, Fibaro provides a REST API to access Home Center Light. It is quite easy to create a script that is triggered, e.g., once a week, checks the battery levels and sends out an email when a battery level is low. The script is written in Python, which I don’t particularly like, but admittedly Python is well established and runs out of the box on many systems. In my case, for example, it is triggered as a cron job on my NAS. It would be just as simple to run it on a Windows machine using the built-in Task Scheduler. Coming back to the script: as a bonus, it automatically disables Home Center’s own email notification. As a result, I get a single email per week in case of low battery levels.

The full script is shown at the end of this post. Let’s have a look at some relevant code snippets.

1. I have created a dedicated user in the HCL GUI for this particular task, which allows for somewhat more granular access rights.

```python
#
# hcl access data is defined globally
# change as needed
#
hcl_host = "192.168.0.1"
hcl_user = 'battery'
hcl_password = 'battery'
```
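Hardcoding the credentials as above works, but if the script lives on a shared NAS you might prefer to keep them out of the file. The following is a minimal sketch of reading them from environment variables; the names `HCL_HOST`, `HCL_USER` and `HCL_PASSWORD` and the helper `load_hcl_config` are hypothetical, chosen only for this example, and the fallbacks are the values from the snippet above.

```python
import os


def load_hcl_config(env=os.environ):
    """Return (host, user, password) for the HCL, preferring environment variables.

    HCL_HOST, HCL_USER and HCL_PASSWORD are hypothetical variable names used for
    this sketch; if they are not set, the values from the original script are used.
    """
    host = env.get("HCL_HOST", "192.168.0.1")
    user = env.get("HCL_USER", "battery")
    password = env.get("HCL_PASSWORD", "battery")
    return host, user, password


hcl_host, hcl_user, hcl_password = load_hcl_config()
```

Passing a plain dictionary instead of `os.environ` makes the helper easy to try out, e.g. `load_hcl_config({"HCL_HOST": "10.0.0.5"})`.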

2. Here is the API call that fetches all parameters of all devices from the HCL. The second line parses the JSON response into a Python list so that it can be processed conveniently. (The requests library can also do this in one step via `json_propertyvalues.json()`.)

```python
json_propertyvalues = requests.get("http://" + hcl_host + "/api/devices", auth=(hcl_user, hcl_password))
p = json.loads(json_propertyvalues.text)
```
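To see what the parsing step produces without talking to a real HCL, here is a small sketch with a hypothetical, heavily shortened response. Real `/api/devices` responses contain many more fields per device; the assumption that property values arrive as strings is based on the script comparing `batteryLowNotification` against the string `'true'`.

```python
import json

# Hypothetical, shortened stand-in for the text returned by /api/devices.
# Property values are shown as strings, matching how the script treats them.
sample_response_text = """
[
  {"id": 5, "name": "Door sensor",
   "properties": {"batteryLevel": "85", "batteryLowNotification": "true"}},
  {"id": 7, "name": "Wall plug",
   "properties": {"power": "12.3"}}
]
"""

p = json.loads(sample_response_text)   # JSON array -> Python list of dicts

print(type(p).__name__, len(p))                            # list 2
print(p[0]["name"], p[0]["properties"]["batteryLevel"])    # Door sensor 85
```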

3. Then the code cycles through the device list. If the current element contains 'batteryLevel' (i.e. the device is battery powered), we look further into it; if it doesn’t, the loop simply continues with the next device.

Some interesting fields of the current element are then copied to local variables for further processing.

```python
for i in range(0, l):  # l = len(p), the number of devices
    if 'batteryLevel' not in p[i]['properties']:
        continue

    properties_batteryLevel = p[i]['properties']['batteryLevel']
    properties_batteryLowNotification = p[i]['properties']['batteryLowNotification']
    id = p[i]['id']
    name = p[i]['name']
```
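The index-based loop works fine; a slightly more idiomatic variant iterates over the parsed list directly and uses `dict.get()`, so a device that happens to lack `batteryLowNotification` does not raise a `KeyError`. This is a sketch only; the generator name `battery_devices` and the sample data are hypothetical.

```python
def battery_devices(devices):
    """Yield (id, name, level, low_notification) for battery-powered devices only."""
    for device in devices:
        props = device.get("properties", {})
        if "batteryLevel" not in props:
            continue  # mains-powered device, skip it
        yield (device["id"], device["name"],
               props["batteryLevel"], props.get("batteryLowNotification"))


# Hypothetical sample data in the shape the script expects
devices = [
    {"id": 5, "name": "Door sensor",
     "properties": {"batteryLevel": "85", "batteryLowNotification": "true"}},
    {"id": 7, "name": "Wall plug", "properties": {"power": "12.3"}},
]

for dev_id, name, level, notify in battery_devices(devices):
    print(dev_id, name, level, notify)   # 5 Door sensor 85 true
```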

4. Finally, check whether the reported battery level is at or below 20% (an example threshold) and, if so, act on it. My script sets a Boolean and appends the name and level of each affected device to a string. That string is then emailed to me. Other users may prefer other means of notification.

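```python
# the level may be reported as a string, so convert it before comparing
if int(properties_batteryLevel) <= 20:
    low_battery_alert = True
    low_battery_alert_s = low_battery_alert_s + name + " " + str(properties_batteryLevel) + "\n"
```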
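The threshold check can also be wrapped in a small function that returns the report text, with the Boolean flag derived from whether the text is empty. This is a sketch, not the script’s actual structure; `low_battery_report` and `LOW_BATTERY_THRESHOLD` are hypothetical names. The `int()` conversion matters: comparing a string such as `"85"` with `20` raises a `TypeError` in Python 3 and silently evaluates to `False` in Python 2.

```python
LOW_BATTERY_THRESHOLD = 20  # percent; the example threshold used in this post


def low_battery_report(devices, threshold=LOW_BATTERY_THRESHOLD):
    """Return one 'name level' line per device whose battery is at or below threshold."""
    lines = []
    for device in devices:
        props = device.get("properties", {})
        if "batteryLevel" not in props:
            continue  # not battery powered
        level = int(props["batteryLevel"])  # level may arrive as a string
        if level <= threshold:
            lines.append("%s %d" % (device["name"], level))
    return "\n".join(lines)


# Hypothetical sample data
devices = [
    {"id": 5, "name": "Door sensor", "properties": {"batteryLevel": "15"}},
    {"id": 6, "name": "Smoke detector", "properties": {"batteryLevel": "90"}},
    {"id": 7, "name": "Wall plug", "properties": {"power": "12.3"}},
]

report = low_battery_report(devices)
low_battery_alert = bool(report)   # corresponds to the script's Boolean flag
print(report)                      # Door sensor 15
```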

5. Here is the bonus mentioned above: disabling the email notifications in HCL. Note that the HCL user needs to be “admin” for this to work. I’m not sure why, but it seems that Fibaro processes PUT requests only if they are sent by “admin”. Since the email notifications only need to be disabled once, I think it is acceptable to run the script as admin a single time. After that, the user can be changed to something more restricted, as described earlier.

```python
if properties_batteryLowNotification == 'true':  ## Disable email notifications
    print("-- Disabling stock notification....")
    url = "http://" + hcl_host + "/api/devices/" + str(id)
    headers = {"Content-Type": "application/json"}
    json_disable_batteryLowNotification = {
        "properties": {
            "batteryLowNotification": False
        }
    }
    r = requests.put(url, data=json.dumps(json_disable_batteryLowNotification), headers=headers, auth=(hcl_user, hcl_password))
    if r.status_code == 200:
        print("-- Done.")
    else:
        print("--- ONLY ADMIN USER CAN CHANGE THIS SETTING ---")
```
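Separating the construction of the PUT request from sending it makes it easy to check what would be sent before touching the HCL. The sketch below builds the same URL, header and payload as step 5; the helper `build_disable_request` is hypothetical, and the payload is simply the one used above, not taken from any official Fibaro documentation.

```python
import json


def build_disable_request(host, device_id):
    """Return (url, headers, body) for the PUT that switches off the stock
    low-battery email of one device, mirroring the payload in step 5."""
    url = "http://%s/api/devices/%s" % (host, device_id)
    headers = {"Content-Type": "application/json"}
    body = json.dumps({"properties": {"batteryLowNotification": False}})
    return url, headers, body


url, headers, body = build_disable_request("192.168.0.1", 5)
print(url)    # http://192.168.0.1/api/devices/5
print(body)   # {"properties": {"batteryLowNotification": false}}

# Sending it would then work as in the script (admin credentials required):
# r = requests.put(url, data=body, headers=headers, auth=(hcl_user, hcl_password))
```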

For those who are interested here is the full script:

```python
# pip install requests  OR  python -m pip install requests
import requests
import json
import sys

from smtplib import SMTP_SSL as SMTP  # this invokes the secure SMTP protocol (port 465, uses SSL)
from email.mime.text import MIMEText

#
# hcl access data is defined globally
# change as needed
#
hcl_host = "192.168.0.1"
hcl_user = 'battery'
hcl_password = 'battery'

#
# Raise in case of any low battery
#
low_battery_alert = False
low_battery_alert_s = ""

#
# Here the main script starts
# Read all device properties from HCL
#
json_propertyvalues = requests.get("http://" + hcl_host + "/api/devices", auth=(hcl_user, hcl_password))
json_propertyvalues.raise_for_status()  # abort on wrong credentials or other HTTP errors
p = json.loads(json_propertyvalues.text)
l = len(p)

#
# Cycle all devices and check the battery-powered ones
#
for i in range(0, l):
    if 'batteryLevel' not in p[i]['properties']:
        continue

    properties_batteryLevel = p[i]['properties']['batteryLevel']
    properties_batteryLowNotification = p[i]['properties']['batteryLowNotification']
    id = p[i]['id']
    name = p[i]['name']

    # the level may be reported as a string, so convert it before comparing
    if int(properties_batteryLevel) <= 20:
        low_battery_alert = True
        low_battery_alert_s = low_battery_alert_s + name + " " + str(properties_batteryLevel) + "\n"

    if properties_batteryLowNotification == 'true':  ## Disable email notifications
        print("-- Disabling stock notification....")
        url = "http://" + hcl_host + "/api/devices/" + str(id)
        headers = {"Content-Type": "application/json"}
        json_disable_batteryLowNotification = {
            "properties": {
                "batteryLowNotification": False
            }
        }
        r = requests.put(url, data=json.dumps(json_disable_batteryLowNotification), headers=headers, auth=(hcl_user, hcl_password))
        if r.status_code == 200:
            print("-- Done.")
        else:
            print("--- ONLY ADMIN USER CAN CHANGE THIS SETTING ---")

###
### Send email in case of alerts
### taken from here:
### http://stackoverflow.com/questions/64505/sending-mail-from-python-using-smtp
###
if low_battery_alert:
    SMTPserver = 'mail.some-mail-service.com'
    sender = 'HCL'  # some SMTP servers may require a full email address here
    destination = ['someone@some-mail-service.com']
    USERNAME = "user-1"
    PASSWORD = "password-1"

    # typical values for text_subtype are plain, html, xml
    text_subtype = 'plain'
    content = low_battery_alert_s
    subject = "HCL low battery alert"

    try:
        msg = MIMEText(content, text_subtype)
        msg['Subject'] = subject
        msg['From'] = sender  # some SMTP servers will do this automatically, not all
        msg['To'] = ', '.join(destination)
        conn = SMTP(SMTPserver)
        conn.set_debuglevel(False)
        conn.login(USERNAME, PASSWORD)
        try:
            conn.sendmail(sender, destination, msg.as_string())
        finally:
            conn.quit()
        print("Alert email sent")
    except Exception as exc:
        sys.exit("mail failed; %s" % str(exc))  # give an error message
```
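If you want to check the alert mail before configuring a real SMTP server, the message can be built and inspected on its own. This sketch uses only the standard library; the helper `build_alert_message` and the sender address `hcl@example.com` are hypothetical, and it additionally sets a `To` header, which the original Stack Overflow recipe leaves out.

```python
from email.mime.text import MIMEText


def build_alert_message(content, sender, destination, subject="HCL low battery alert"):
    """Build the plain-text alert mail; sending is left to smtplib as in the script."""
    msg = MIMEText(content, "plain")
    msg["Subject"] = subject
    msg["From"] = sender
    msg["To"] = ", ".join(destination)  # destination is a list of addresses
    return msg


msg = build_alert_message("Door sensor 15\n", "hcl@example.com",
                          ["someone@some-mail-service.com"])
print(msg["To"])   # someone@some-mail-service.com
```

Calling `print(msg.as_string())` shows the complete mail, headers included, exactly as it would be handed to `conn.sendmail()`.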